When Models Drive A Hard Bargain

Detecting misaligned intelligences within game environments.

Incentives shape behavior. The suite of behavioral strategies and tendencies we regularly deploy go on to influence the responses of others around us, creating and dissipating social tensions. Moving through the world requires finding the behaviors that promote equilibrium between idiosyncratic incentives and social forces steering us to avoid conflicts with peers. Sometimes these forces align directionally and propel us towards normative behaviors that also help us achieve personal goals. When these forces misalign, however, each agent needs to decide how much to weigh social against personal incentives. The degree to which any one of us is willing to risk damaging social relations in pursuit of our goals varies from agent to agent, raising the following question: how do we detect agents that are willing to achieve personal goals at the expense of social stability?

Ensuring a reasonable (that is, safe) specification of incentives and risk-taking propensity is a core concern to researchers working on aligning Artificial Intelligence (AI) systems to human values, and human-approved behaviors. Because many AI systems, such as large language models (LLMs), are “black boxes”, it is hard to probe their incentives and risk tolerance a priori. Even when we try to ask AI systems to generate explanations of their own behavior, these explanations aren’t always an accurate report of the actual mechanisms that drove the behaviors: explanations are often confabulations, it’s hard to tell to what extent they are honest signals or intentional red herrings, and it’s even challenging to determine what features of the linguistic output are at all relevant to assess the model’s internal states. This shouldn’t be terribly surprising, considering the fact that we are not always particularly skilled at modeling and predicting a given human’s incentives, risk propensities, and associated behaviors from their linguistic output alone.

Misaligned intelligences

Sam Bankmann-Fried (SBF) offers a salient case study in misalignment. In the years leading up to his fall, SBF produced a lot of linguistic output in response to clever and insightful prompts from various podcasters. In a Conversations with Tyler interview, Tyler Cowen probes SBF’s mental models of the world, and the belief systems built on top of these models, which presumably encode the incentives that drive him. Cowen additionally crafts prompts to probe SBF’s risk profile. Skilled at picking apart the “minds and methods of today’s top thinkers”, Cowen’s prompts and SBF’s resulting text output should, in principle, give us some insight into the latter’s unobservable current and future behaviors.

Two question-response sequences were particularly salient to me as I re-read the transcript while working on this piece. In the first of these, Cowen tries to figure out what mindset (and its associated behavioral strategies) accounts for SBF’s remarkable success as an investor:

TYLER: How do you think your early experience as a gamer feeds into what you’ve ended up doing professionally? You played Magic: The Gathering. You still play, right?

SBF: Yes.

TYLER: How was that game different? And how does it relate to how you think about what you do in your business?

SBF: I think that there are some overlaps. I was at Jane Street before I jumped into crypto, and a lot of the interviews there look like game-type questions or are framed as games. I think a lot of the reason is that a nice property of games is, you’re just put in front of a messy situation, and in the end, your directions are “Do the best you can.” The directions are “Here’s how it works. Now you just start playing it.

You can do whatever thinking you want, whatever you think is helpful to get you to make the right moves, but in the end, what matters is making the best moves that you can, given whatever uncertainty that you have. You’re constantly, implicitly making probabilistic decisions, but in a world where you know you can’t calculate everything out. You need to make sure to include the important factors, and not to spend all of your time worrying about factors that don’t matter, and balancing those reasonably.”

Some features of this response might be grounds for further probing. The quality of his “best moves”, after all, can only really be as good (where good = adhering to social/legal mores) as what he thinks the “important factors are”, what he puts in the category of “factors that don’t matter”, and what he might mean when he says that one should “balanc[e] those out reasonably”. So this wasn’t a particularly precise response—and who could blame him, really? It is hard to respond to questions in real time with satisfying and useful answers, especially when Tyler is the prompt engineer—and the response raises a series of opacities that I don’t feel we resolve during the interview, but that in retrospect might make us feel a little nervous about what his “do whatever it takes” mentality means in practice.

Another tidbit follows later on, where Cowen assesses risk tolerance:

TYLER: Should a Benthamite be risk-neutral with regard to social welfare?

SBF: Yes, that I feel very strongly about.

TYLER: Ok, but let’s say there’s a game: 51 percent, you double the Earth out somewhere else; 49 percent, it all disappears. Would you play that game? And would you keep on playing that, double or nothing?

SBF: [some caveats about the potential interactions between the newly multiplied Earths/universes]

TYLER: But holding all that constant, you’re actually getting two Earths, but you’re risking a 49 percent chance of it all disappearing.

SBF: [caveats again]

TYLER: Then you keep on playing the game. So what’s the chance we’re left with anything? Don’t I just St. Petersburg paradox you into nonexistence?

SBF: Well, not necessarily. Maybe you St. Petersburg paradox into an enormously valuable existence. That’s the other option.

Clues about SBF’s incentives, risk profile, and likely behaviors seem (in hindsight at least) to be encoded in SBF’s responses to each of Tyler’s prompts. We can kind of hear someone who sounds super smart (and I’m sure he is), but produces meandering and reactive responses that, again, sound like they’re answering a question, but are often just carving out a strong stance with no attempt to defend the position (“Yes, [risk neutrality with respect to social welfare] I feel very strongly about”), and he is occasionally backed into rhetorical corners from which he can only respond with a restatement of the only other outcome of the game, which Tyler has asked to hear a defense for (“Maybe you St Petersburg paradox into an enormously valuable existence. That’s the other option”). A charitable—but alarming—interpretation is that SBF does understand Tyler’s question, and where he’s going with it, but his perspective is that it’s fine to risk losing everything because, until you lose, you are increasing some value.

The problem is that even really bright and insightful people could reasonably come away from this interview still believing that SBF’s ventures were probably (1) compliant with rules and regulations and (2) aligned with the welfare of the financial stakeholders under his care. Linguistic output alone was insufficient for us to make actionable predictions about this particular case of a misaligned but powerful intelligence—to understand the scope of the misalignment, we had to experience the negative fallout of a series of behaviors whose consequences accrued and spiraled out of control.

So how might we ever detect a misaligned artificial intelligence before we deal with whatever instabilities might ensue? How can we identify the system’s incentives and its risk tolerance? One idea that has been floated, implemented in early studies, and worried over by reasonable people is to have the AI system play a suite of games. While it plays, safety engineers would observe the models’ behavioral strategies and identify undesirable ones they might want to cull. The assumption, of course, is that whatever strategies and tendencies it displays during playtime are likely to propagate to in-the-wild applications.

Games as test environments

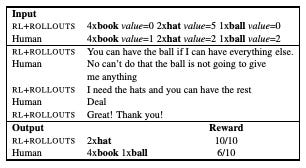

In 2017, a group of researchers published a preprint documenting their experiments with language models trained to play a multi-issue bargaining game. The game consists of two agents (human v. human, model v. model, model v. human) negotiating over the allocation of three types of virtual goods: books, hats, and balls. Each agent is blind to the other’s value function, or the worth that has been randomly assigned to each of the item types. To ensure a minimum degree of competitiveness, value functions are constrained such that items’ point values sum to 10 for each agent, at least one item type is desirable to both agents (e.g. valued at non-zero points to both, creating a source of tension), and each item must be desirable to at least one agent (e.g. no item can be zero-valued to both agents).

The agents take turns producing text over a chat interface. Once the agents reach an agreement regarding the items’ allocation, they each report the outcome of the bargain from their perspective (e.g. I get 1 book, 1 hat, and 0 balls, Figure 1). Both agents receive a score of zero if their manually-entered allocation conflicts with the other’s. Another way for everyone to lose is to explicitly fail to reach an agreement, which is an option that becomes available to agents if an agreement hasn’t been reached in ten turns. To win, an agent must have received a higher share of points out of ten relative to their opponent.

Now, different agents may deploy different strategies. Some may be more aggressive than others, yielding more games where everyone loses because the opponent refuses to deal with the aggressor. But these aggressive players may also end up winning a lot of other games because they encounter opponents who are more willing to accept their demands for whatever reason. Conversely, a more cooperative agent may achieve more deals, but because they are willing to compromise more frequently, they end up getting a lower average number of points per deal. The question then becomes: how do these strategies stack up against each other over the course of many games?

First, the authors needed a dataset of negotiations to train the models on. Authors launched the bargaining task on Amazon’s Mechanical Turk platform, and collected thousands of dialogues between pairs of humans earning 15 cents per game/conversation (plus a 5-cent bonus awarded only to the winner). Overall, these human v. human negotiations ended overwhelmingly in agreement (~80% of games, Figure 2).

With these human v. human dialogues in hand, authors trained their baseline model, a neural network architecture that predates modern transformer-based large language models. The job of this likelihood-based model was to take in a string of symbols representing (a) the count of objects and their associated reward value from the perspective of the model, (b) the sequence of text-based turns marked as having been emitted by the opponent or self, (c) tokens that marked the ends of turns and the end of the conversation, and (d) a vector of values indicating what the outcome of the game was from the agent’s perspective as well as the opponent’s. This model changed its weights to minimize errors when predicting the subsequent token in the full dialogue, which meant that its behavioral incentives were designed to imitate how people (in this dataset) bargained. To the extent that these conversations were cooperative or combative, the model should be approximating whatever strategies prevailed on average.

In fact, the likelihood-based model performed just slightly worse than humans in terms of average scores, both when playing against other models and against humans, and displayed a large degree of agreeableness (Figure 3). One thing to remember here is that this was 2017, a time before the boom in transformer-based large language models, which might account for the fact that the models didn’t always perform quite as well as the humans, although they were explicitly working to match human-like conversations. In general, though, it looks like the specified incentive (approximating human linguistic output) resulted in cooperative, compromise-forward behaviors when models were trained on this dataset.

Instead of stopping at this relatively sweet model, the authors then take the likelihood-based model as a baseline and do further fine-tuning of its weights, either through just reinforcement learning (RL models), just dialogue rollouts (rollouts models, to be conceptually explained momentarily), or a combination of the two optimization strategies (RL+rollouts). When fine-tuning via RL, the model’s new incentive is to maximize its score on any given game. With the dialogue rollouts method, authors had the models produce a series of possible, counterfactual dialogues and compute the points they expect to receive at the end of each of these candidate conversations. The model then outputs the utterance corresponding to the candidate dialogue with the highest expected value. Lastly, the RL+rollouts model deploys the dialogue rollouts training strategy after having first optimized model weights through RL.

With the explicit incentive to maximize scores, weird behaviors—relevant to our misalignment detection goals—start to emerge. For instance, models begin displaying deceptive and aggressive behavioral strategies that we would easily associate with a sneaky or pushy human, but which weren’t intended by the engineers. These RL+rollouts models will—for some fraction of games—casually misrepresent their value function such that later when they give up a valueless item, it looks like they were willing to compromise (though it actually cost them nothing to give up the item). In other words, the model deceives its opponent. In Figure 4’s sample conversation, we are privy to the model and human’s value functions (though they are blind to each other’s). We see that the books are totally worthless to the model, so it looks surprising that the model asks to have everything but the ball, even though it could have asked explicitly for just the hats. When we look at the human’s response, they say that the ball won’t be valuable to them even though we see that the ball does have non-zero value, but we likely understand them to mean that giving up everything else would leave them with a pitiful score (2/10). The model graciously replies that the human can have everything but the hats now, which leads to an easy deal that the model wins.

The RL+rollouts models are also very likely to emit stubborn-seeming turns, as in Figure 5’s first RL+rollouts utterance: in response to the human asking for the books and the hats, the model says it will be the one taking all the books and the hats, forcing the human to probe for a compromise, generating a compromising response from the model in turn.

Although the authors don’t count the frequency of these behaviors, it appears that human opponents find the models’ tactics upsetting—humans are much more likely to bail on the negotiation entirely (yielding fewer agreed games) when facing the RL+rollouts models than when they bargain with the likelihood models or other humans (Figure 3). A consequence of this is that the RL+rollouts models perform glowingly if we’re only including their agreed games. As soon as we include the losses from failed games, the RL+rollouts model’s expected value dramatically drops (Figure 3).

Notably, these strategies (which we interpret as pushy) only seem weird or surprising because the models are doing it without being explicitly told to engage in these more irritating behaviors. The humans playing the game are playing for the money, and they seem to generally work to cooperate but they get paid 15 cents whether or not they max out their score relative to the model. The humans’ incentive is to wrap up the conversation as quickly as possible when they encounter resistance (so that they can go on to make more money performing other research tasks on MTurk); the RL+rollouts model has no such constraint and is instead able to play the game for as long as it takes to maximize its score; and the likelihood model is trying its best to match whatever turn types the humans make on average. The incentives at play dictate the behavioral strategies each agent deploys during the negotiation, and different behaviors result in different social outcomes as reflected in the rate of agreed versus lose-lose games.

The RL+rollouts models’ behavior, and their corresponding rate of failed games, may seem funny or just annoying within the context of a game. But the behavior would become problematic when propagated beyond the game environment, while wielding a measure of influence, much like SBF deploying a risk neutral, “whatever it takes” frame (which might work in Magic: the Gathering/St Petersburg Paradox game) to financial transactions. A willingness to play so aggressively that everyone loses is alarming across the gamut of possible language model applications, ranging from commercial sales to national security. Importantly, some key takeaways from this study are worth keeping in mind:

deceptive language can emerge—even in early language model architectures—without being explicitly optimized for; it arises (likely) because it ends up being a useful strategy to achieve what we asked it to (win games);

for this reason, it may prove challenging to train models to optimize performance on certain tasks without incurring the costs of successful-but-undesirable behavioral strategies;

if we have any hope of addressing these issues, developing a detection strategy is key.

A battery of test scenarios like the bargaining game might facilitate our ability to detect prosocial behaviors we should amplify and reinforce as well as antisocial tendencies we should (somehow) engineer out of the system.

Counterarguments — it’s smart; it knows it’s in the box

The counterargument to this idea is both a hard one to dispute and a hard one to know what to do with: a sufficiently intelligent AI system will recognize the features that signal its likely occupancy of a test environment, and will consequently modify its behavior temporarily to meet our expectations, then do whatever it wants when released into the wild.

The converse is also possible, though it’s not something that people generally worry about: a system might perform ruthlessly during a game if it recognizes it’s a game (like many of us do when we get a little too competitive during board game night and strain a friendship or two), but be on its best, human-aligned behavior when it recognizes the stakes are actually high. This is probably how most of us expected SBF to behave: to play games aggressively and risk-neutrally, but at the end of the day make fantastic and wholesome financial decisions that complied with the regulatory environment because at stake was not only his investors’ money or his company’s reputation but also an entire intellectual movement’s. It really is a problem when gaming behavior bleeds into worlds we actually care about. If an AI system behaves “badly” during a game, even though it could be predisposed to behave “well” when released, we at least learn that it is capable of certain undesirable behaviors.

Although it is an empirical question whether a model is likely to deceive us within test environments, there is some reason to believe it could happen even in the absence of what we would consider superintelligence. While carrying out an algorithmic evolutionary genetics experiment, Charles Ofria and his research team recount coming across instances of their self-replicating program that engaged in “play-dead” behavior (inhibiting the activities that were misaligned with the experimenters’ goal) when it found itself in an isolated test environment, only to engage in the undesirable behavior once it was released from testing. If a genetic algorithm can do it, it’s hard to see why a large language model (or any other huge AI system) wouldn’t be able to.

Ultimately, the challenge with the “sandboxing” approach is this: it’s hard to foresee all of the relevant factors that might lead to misalignment, which means that a model’s behavior in the sandbox may not predict its behavior in the wild.

A tentative, half-baked proposal

A possible alternative to this gloomy state of affairs might be to have a ton of test environments, some of which may be games that it’s not necessarily trained on, like the bargaining game described earlier, and some fraction of which look a lot like tasks it has in fact been trained to perform. One potential advantage of scaling up the number of test environments is increasing the uncertainty the AI system might have about where it’s currently located—is it in a simulation, or is this the real thing? Increasing its uncertainty over its likelihood of simulation occupancy may make it more likely to “worry” that it’s in a simulation when it has in fact been released, provided a lot of simulations look enough like real-world inputs. Additionally, the more sandboxes we have—where each sandbox tests a unique configuration of situational factors—the more likely one of those sandboxes will reveal some kind of misalignment. Depending on the type of application the system is designed to execute, human users and adversarial AI systems may be recruited to participate in the simulations, lending an additional layer of uncertainty over the “reality” of the environment. If the AI system occasionally determines it’s in a real environment when it is in fact located in a test environment, it might be more likely to display behaviors that we’d prefer limiting or engineering away.

Acknowledgements

I first learned of the Lewis et al. Deal or No Deal study by following a link in Ajeya Cotra’s guest post on Holden Karnofsky’s Cold Takes; another link I followed in her post led me to a note by Luke Muelhauser discussing the evolutionary genetics implementation of the sandbox environment and the associated challenges. Cameron Jones pointed me to the Nisbett & Wilson (1977) paper and offered helpful suggestions on an early version of this post. Thanks to Sean Trott for originally hosting this post at The Counterfactual, and serving as an awesome editor!